As AI tools such as ChatGPT and Gemini become more powerful, organizations must develop AI usage policy guidelines for employees. We explore what companies are doing and what to include in your AI AUP template.

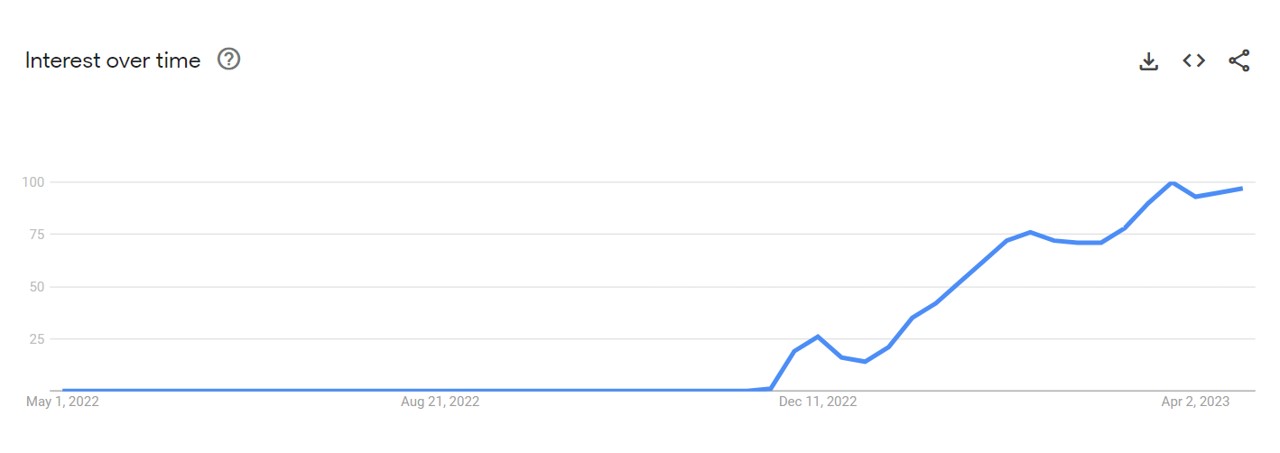

Despite predictions (and Meta’s $30+ billion gamble) that the metaverse would be the next digital horizon we crossed, it is AI tools that have captured the world’s imagination.

In December 2022, OpenAI’s ChatGPT tool went, virtually overnight, from something we’d never heard of to being more in-demand than Furbys in the 1990s.

As you probably know, ChatGPT (and now Google’s Gemini tool) is an example of generative AI.

Generative AI is a field of artificial intelligence that makes it possible to create new content from existing materials.

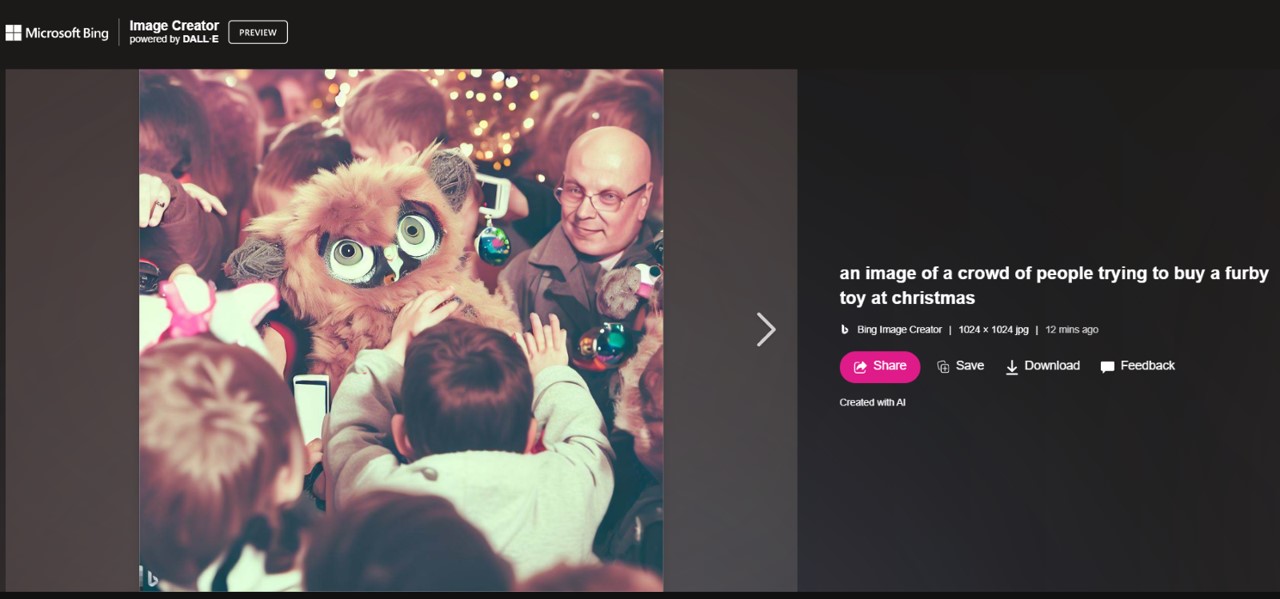

Tools such as DALL-E 2, for example, use word-based prompts to generate new images.

Bing Image Creator conjured up an image of a Furby that scares me.

In the case of many generative art tools, powerful software scrapes thousands of images and text-to-image pairings. It then uses this information to generate new pictures.

Words, music, data, and video are all generated using similar models. In each case, the AI generates “new” content from that which already exists.

The Insider’s Guide to Internal Comms Technology

Leaving aside questions about intellectual property rights and worker displacement, the potential is clear.

How employees are already using generative AI

ChatGPT alone has amassed over 100 million users – many of them using it to support their work.

I asked ChatGPT for five common work-related prompts. Here’s what it revealed about individual use cases:

1. Writing assistance – People often ask me to help them with writing tasks such as creating content for their website, drafting emails, or even writing essays.

2. Research – Many people ask me to help them with research tasks such as finding information on a particular topic or compiling data from multiple sources.

3. Translation – People often ask me to help them with translation tasks, such as translating a document or conversation from one language to another.

4. Data analysis – People often ask me to help them with data analysis tasks, such as creating charts and graphs or identifying patterns in data.

5. Scheduling – People often ask me to help them with scheduling tasks, such as setting up meetings or appointments.

Source: ChatGPT

As people become more adept at using AI prompts, the tools will be used in more advanced ways. This may include computer programming and the automation of work that currently requires human skill.

How companies can use generative AI

Organizations are already using generative AI on a larger scale too. This includes:

- Improving customer service: Generative AI can create personalized customer experiences by generating text, audio, and video content for customers.

- Making better use of data: Analyzing large amounts of data and spotting patterns that would otherwise be difficult to see. This information can improve decision-making and lead to better products and services.

- Improving operational efficiency: Automating tasks such as generating reports or creating marketing materials (gulp!).

- Enhancing employee productivity: Interact’s AI-powered intranet tools have several uses, one of them being a machine learning language checker. This tool offers writing suggestions to support intranet content creation.

The technologies are still in their early stages, but they have the potential to revolutionize many areas of work.

What are the risks of not having an AI usage policy?

Aside from the slim danger that machines may become sentient and take over the earth, there are also some prosaic reasons organizations need to think about using AI in the workplace.

In a recent survey of senior IT leaders, for example, almost six in 10 (59%) questioned the accuracy of generative AI outputs. Almost two-thirds (63%) saw the potential for bias, such as misinformation or hate speech. Why is this?

First, artificial intelligence is not always correct. If a tool such as Gemini scans documents that aren’t accurate, it will return inaccurate results. Allowing all staff to use these tools without an AI usage policy could result in errors creeping into products, services, customer relationships, and onto your intranet software.

Secondly, generative AI platforms draw mainly on publicly available data. Information that isn’t already on the internet or held within the system won’t be found. This may cause an over-reliance on limited information when more data exists internally or behind paywalls.

Thirdly, without oversight, there is a danger of bias and the generation of offensive content. According to Gemini:

“If you ask Gemini to generate a story about a certain group of people, it may generate a story that is offensive or harmful to that group of people.”

Finally, and perhaps most importantly for businesses, there is a potential threat to information security. Gemini states that the prompts and information provided by users are stored on Google’s servers and then may be used to train the system, or, somewhat nebulously, “for other purposes”.

If employees use private information about customers, prospects, or colleagues without guardrails, the potential for data security breaches increases.

Examples of companies with an AI usage policy

In the same way that organizations set and review social media policies, an AI usage policy is essential for awareness.

We spoke with some professionals who are implementing rules already.

According to Stewart Dunlop, Co-Founder at Linkbuilder.io, its “employees are prohibited from putting any protected or confidential information into search engines powered by artificial intelligence.”

Raising awareness of the new policy is important, so the company “decided on broadcast notifications sent out via email, and regular updates during weekly staff meetings held online over Zoom or Teams calls – whichever was more convenient for everybody involved.”

Similarly, Ben Lamarche of Lock Search Group believes, “it is necessary to implement regulations that limit the kind of information employees can input into AI tools. Employees are obligated to guard confidential information, which could otherwise be used by your competitors.”

Beyond data security, Lamarche also sees the need to codify intellectual property considerations from the beginning.

“It is also critical to draw up policies on the ownership of content employees create for the organization using AI tools. Having a written Employee AI Use Policy and embedding this in the employment contract is definitely the way to go as [we] progress into the new era of machine learning.”

Ben Lamarche, Lock Search Group

Balancing security with innovation

At performance management platform Appraisd, CEO Roly Walter takes a balanced approach to AI usage.

“Although we see AI tools as an excellent opportunity to improve processes, we are concerned about people using AI tools, especially with regard to data privacy.”

To counter this, the AI usage policy at Appraisd gives staff specific user guidelines.

“Our guidelines state that you cannot experiment with real data – you must use ‘mocked’ data for testing. The guidelines are communicated during company weekly meetings – we accept that it’s a moving target and new guidelines are added as we go along. Generally, the guideline is to proceed with caution using fake data. Assume someone else can access what you type into a search engine unless the due diligence process has established that your data is private.”

Like other tech companies, however, Walter encourages innovation too.

“We encourage employees to demonstrate the ideas they’ve discovered using ChatGPT with each other. We hold an annual hackathon that aims to give all employees time to explore new tech like this. Recently we spoke about the possibility of integrating ChatGPT, and similar tools, at our last community event with clients about their feelings on AI and its potential and its risks.”

In addition to guidelines, some companies have already put more stringent measures in place. James Wilkinson, CEO and co-founder of Balance One Supplements, says that his company is “in the process of putting in place a system for monitoring and auditing all AI search engine activity to detect and minimize any exposure of protected data as well as unauthorized use of generative AI tools that go against our use case policy.”

Acceptable Use Policy template

The policy prompts below offer some general guidelines for reference only.

These headings and suggestions do not take into account differences in international law or specific use cases in your organization. They are not a legal document and you should consult internal stakeholders in legal, HR, IT, and other functions.

You should also consult all relevant official agencies and advice in the creation of any such policy.

Purpose

The Purpose section of your AI Acceptable Use Policy (AUP) should state that the document is to establish guidelines and best practices for the responsible use of AI.

It may mention that the AI usage policy is to promote the innovative use of AI tools, while also minimizing the potential for intentional or unintentional misuse, unethical outcomes, potential biases, inaccuracy, and information security breaches.

Scope

The Scope typically references the range of staff, volunteers, contractors, and any individuals or organizations who may have access to and use AI in connection with or on behalf of your company.

An AI usage Scope may cover the use of AI tools for work tasks (in any location), but may also include private tasks undertaken using laptops or other equipment or logins provided by the company. It may reference the potential use of any data created or stored by your organization (e.g., remembering a customer’s email address and then using it out of hours). This is where the AUP may overlap with other data regulation guidelines and security processes within your company.

Policy

This section sets out the various best practices and guidelines employees should follow.

The following list is not exhaustive; your policy should be a living, iterative document that you continually update and revise.

Here are some considerations.

Evaluation and approved usage

Some organizations will stipulate that employees must evaluate the security, terms of service, and privacy policies of AI tools. However, your IT department may have a process in place for this evaluation, so employees should check the approved software list and submit an IT request for accessing a new platform if required.

Compliance with security policies

Security policies apply to new tools as well as existing ones. To ensure the company continues to adhere to legislation (and to qualify for security certifications), employees should use strong passwords, allow security updates, and follow relevant policies. This may include not sharing login credentials or other security information with third-party tools.

Data security and confidentiality

Employees must adhere to data protection policies when using generative AI. As mentioned above, prompts and other data shared with AI tools may be stored by the vendor and used to train the system, so they should be treated as potentially public. Sharing information about customers or fellow employees could constitute a significant breach of data regulations. Sensitive or personal data should be ‘mocked up’, anonymized, or encrypted before it is used.
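As a rough illustration of what anonymizing a prompt might look like in practice, here is a minimal Python sketch that swaps email addresses and phone-like numbers for placeholders before text is pasted into an AI tool. The function name `redact_prompt` and both patterns are hypothetical and deliberately simple. They will miss names, postal addresses, account numbers, and many other identifiers, so treat this as a starting point for discussion with your IT and data protection teams rather than a complete safeguard.

```python
import re

# Hypothetical, deliberately simple patterns for illustration only.
# Real personal data takes many more forms than these two.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_prompt(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


prompt = "Draft a reply to jane.doe@example.com, who called from +1 (555) 010-2345."
print(redact_prompt(prompt))
# prints: Draft a reply to [EMAIL], who called from [PHONE].
```

A check like this could run on a user's device or in an internal gateway, but it does not replace the human judgment the policy asks for: if in doubt, leave the data out.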

The policy should contain a process for reporting data breaches in line with your company’s data protection guidelines.

Appropriate use

AI tools have their own appropriate use policies that employees should check and follow. Using AI to create harmful, offensive, or discriminatory content, for example, may be not just a violation of terms of service but also an illegal act.

Companies should advise and train staff so this does not happen (intentionally or unintentionally) on company time and using company equipment.

It is also important to outline how AI tools will be considered as part of the company’s other policies on discrimination, privacy, or inappropriate behavior, for example.

Identifying and mitigating bias

We have already established that AI outputs are potentially biased (depending on the information the system is trained on). If employees and the organization at large are going to use these AI outputs as part of their work, how will they mitigate these biases?

Suggestions may include regular reviews of outputs by diverse groups of stakeholders, guidance and training on algorithmic biases, and collaboration with AI platform vendors to reduce bias.

There may also be a crossover with DE&I policies on this point as there is a potential risk for AI tools to generate discriminatory or offensive content.

Verifying accuracy

One of the problems with word-based AI tools such as ChatGPT and Gemini is that when asked for statistics, they often do not give sources. As such, it may be hard to verify where information has come from and how accurate it is.

Employees should review information gleaned from AI tools before using it. Any facts, data, or insights should be cross-checked against reliable sources to ensure accuracy and avoid misinformation.

Transparency

Do people have a right to know if content has been generated by AI?

In some circumstances this will be irrelevant, but in many areas of work this is an important question. If your design agency or advertising firm relies on generative AI that does the work in half the time a human would take, but still charges for the same hours, do your clients have a right to know?

Similarly, do consumers want to take legal or financial advice that has been generated by an AI tool and then sent to them as human advice?

This section should detail the guidelines for what disclosures need to be made.

Training

Will your AI AUP include a training element? It may not be legally mandated yet, but for companies in highly regulated or advisory sectors especially, instituting training and awareness programs may be desirable.

This section may contain details of the frequency and delivery methods of training sessions or resources. As AI is a rapidly developing field, you may also want to appoint an internal champion whose responsibility it is to monitor and update the training.

Intellectual property

In line with international and local laws (and company guidelines), employees must respect intellectual property rights (IPRs). Using AI tools in a way that plagiarizes content or infringes IPRs should be addressed in your AUP.

As mentioned earlier, consideration should also be given to whether you will require AI-generated content to be attributed as such.

Reporting misuse or concerns

Employees should be encouraged to report misuse of AI tools, or concerns about their inappropriate use, and should have clear channels for doing so.

Statement of acceptance

It is important for all staff to read and understand the policies set out by the company. A statement of acceptance may reinforce the responsibilities of each employee and remind them of their duties with regard to AI platforms.

This section may also outline how the company monitors acceptance. The Interact platform offers a “Mandatory Read” system where all staff are notified of changes to policies and then must indicate they have read and understood the document. This is important for compliance purposes.

Alternatively, you may ask the employee to sign and date the policy.

Disclaimer: The Acceptable usage policy suggestions here are advisory and not comprehensive. Neither the author nor Interact will assume any legal liability that may arise from the use of this policy.

The feature image on this page is by rawpixel.com on Freepik.